Over the last two years, Generative AI has moved from isolated experimentation to the center of enterprise strategy discussions. What began as curiosity within innovation teams has quickly become a board-level priority, with organizations under pressure to define a clear AI roadmap, demonstrate progress on AI adoption, and articulate how AI will shape long-term competitiveness.

Yet beneath this urgency sits a quieter reality. While interest in AI adoption has surged, successful AI implementation at enterprise scale remains uneven. Many organizations invest heavily in pilots, platforms, and tooling, only to discover that meaningful AI transformation remains elusive. Early use cases show promise, demos impress stakeholders, and momentum builds briefly, but sustained impact fails to materialize.

This gap between ambition and outcome is not accidental. It is structural.

AI adoption is often treated as a technology initiative. In practice, it is a readiness problem. Organizations move forward assuming they are prepared, without fully understanding whether their strategy, governance, data, operating model, and culture can absorb what AI introduces. The GenAI Readiness Canvas exists to surface that reality before it becomes an expensive lesson.

For a deeper perspective on how AI is reshaping engineering operating models beyond tooling and productivity gains, we explore this shift in detail in our analysis of AI in software development.

Why AI Adoption Struggles Inside Enterprises

When AI adoption struggles inside enterprises, the failure rarely announces itself. There is no single moment where leadership formally concludes that an initiative has failed. Instead, progress dissipates gradually. Pilots continue running beyond their intended scope, producing outputs but never expanding into core workflows. Use cases remain confined to small pockets of the organization, disconnected from enterprise decision-making. Over time, confidence erodes quietly as expectations and outcomes drift further apart.

What makes this pattern particularly difficult to detect is that, on the surface, everything appears functional. Infrastructure investments are in place. Data platforms exist. Vendors are selected. Budgets continue to be allocated. From a distance, AI implementation appears to be moving forward.

Internally, however, a more fragile reality emerges. As AI systems encounter real operational complexity, long-standing organizational misalignments begin to surface. Data definitions differ across functions. Ownership of AI-influenced decisions is unclear. Product teams optimize for speed and experimentation, while legal, security, and compliance teams optimize for certainty and risk reduction. These tensions are not new, but AI amplifies them in ways traditional software systems never did.

Unlike deterministic systems, AI magnifies ambiguity. Small inconsistencies that organizations previously managed through informal coordination now surface as systemic risk. When intelligence is embedded directly into workflows, unresolved questions around accountability, trust, and governance become blockers rather than inconveniences. This is where many Enterprise AI adoption efforts stall.

McKinsey has consistently highlighted this pattern, noting that while a majority of organizations invest in AI initiatives, only a small fraction succeeds in scaling them across the enterprise. The primary constraints are rarely technical capability. They stem from governance gaps, unclear ownership, and operating models that were never designed for intelligence-driven systems.

Leadership often misreads this moment. The instinct is to invest in better tools, stronger models, or additional training. In reality, the organization is encountering a readiness gap rather than a technology limitation. Without addressing that gap, additional investment accelerates friction rather than value.

The Readiness Question Leaders Rarely Ask

Before selecting platforms, approving use cases, or finalizing an AI deployment strategy, there is a more fundamental question leaders must confront: is the organization structurally ready for AI implementation at scale?

This question extends far beyond infrastructure readiness. It touches leadership alignment, governance discipline, data reliability, operating model design, and cultural preparedness. It determines whether an AI investment strategy becomes sustainable or fragmented. Yet in practice, this question is often skipped.

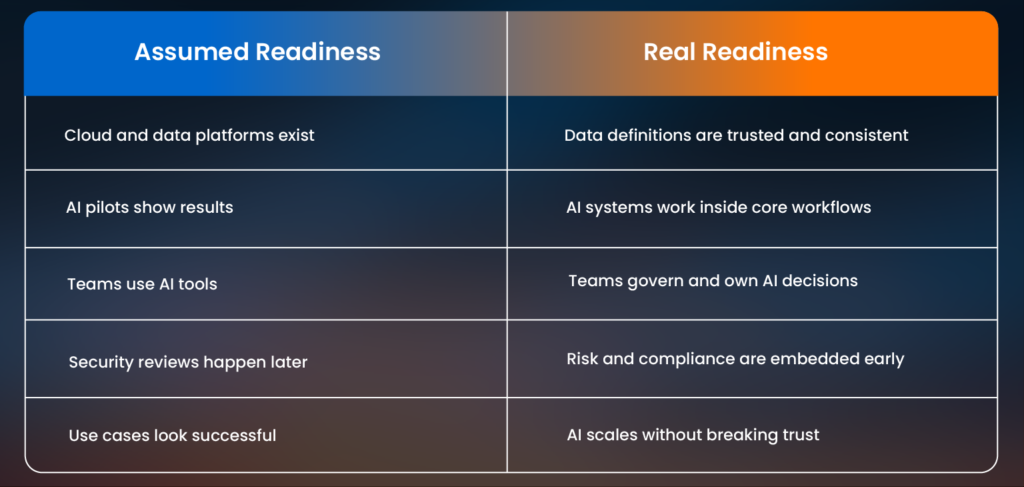

Many organizations assume readiness based on surface indicators. Cloud adoption is mature. Data platforms exist. Analytics teams are in place. From a traditional technology lens, these signals suggest preparedness. From an AI lens, they are insufficient.

Without a deliberate AI readiness assessment, AI roadmaps are built on assumptions rather than evidence. AI governance frameworks lag behind deployment. AI compliance risks surface only after systems are already live. Generative AI deployment moves faster than organizational controls can adapt. The result is not acceleration, but instability.

Why Enterprise GenAI Raises the Stakes

Enterprise GenAI fundamentally changes the readiness equation. Traditional AI systems were often narrow and constrained. Generative AI deployment introduces systems that are probabilistic, adaptive, and deeply embedded into decision-making workflows. Their behavior evolves over time. Their impact extends beyond performance into trust, ethics, and regulatory exposure.

As a result, GenAI strategy cannot be treated as a continuation of earlier AI efforts. GenAI deployment forces organizations to confront questions they may have postponed for years. How trustworthy is our data when used at scale? Who owns decisions influenced by AI-generated insights? How do we audit systems that learn continuously? How do we balance speed with accountability?

Gartner has emphasized that Generative AI introduces entirely new categories of operational and governance risk that traditional AI governance frameworks were not designed to handle. As organizations move from experimentation to Enterprise GenAI, readiness becomes less about tooling and more about control, accountability, and trust.

This is why many AI deployment challenges surface only at scale, long after pilots have been declared successful.

This readiness challenge becomes even clearer when viewed through the lens of how engineering, product, and architecture operate in AI-native environments, a transition we examine in our perspective on AI-native software development.

Why Readiness Must Be Paired with Risk Visibility

Readiness alone is not sufficient. Two organizations at the same maturity level may face very different exposure depending on where GenAI is applied.

For this reason, the GenAI Readiness Canvas pairs maturity assessment with a risk heatmap that translates readiness gaps into business-relevant risk categories, including:

- safety and hallucination risk

- compliance and regulatory exposure

- data leakage and privacy risk

- operational dependency risk

- trust and adoption risk

This risk-based view allows leaders to distinguish between:

- use cases that are safe to pilot,

- initiatives that require remediation before proceeding,

- and deployments that should not move forward at all.

The heatmap reframes AI decisions as risk-managed investments, not experimental bets. It enables leadership teams to decide where AI can responsibly accelerate and where restraint protects enterprise value.
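As a rough illustration of that triage, the sketch below scores a use case against the five risk categories listed above and lets the worst score decide among the three outcomes. The 1-to-5 scale, the thresholds, and every name in it (`RISK_CATEGORIES`, `Decision`, `triage`) are assumptions made for this example; the Canvas itself does not prescribe them.

```python
from enum import Enum

# The five risk categories from the heatmap. The identifiers are hypothetical.
RISK_CATEGORIES = (
    "safety_hallucination",
    "compliance_regulatory",
    "data_leakage_privacy",
    "operational_dependency",
    "trust_adoption",
)


class Decision(Enum):
    PILOT = "safe to pilot"
    REMEDIATE = "remediate before proceeding"
    STOP = "do not move forward"


def triage(scores: dict, remediate_at: int = 3, stop_at: int = 5) -> Decision:
    """Map per-category risk scores (1 = low, 5 = severe) to a decision.

    The single worst category drives the outcome, so one severe exposure
    cannot be averaged away by low scores elsewhere. The scale and both
    thresholds are illustrative assumptions.
    """
    missing = [c for c in RISK_CATEGORIES if c not in scores]
    if missing:
        raise ValueError(f"unscored risk categories: {missing}")

    worst = max(scores[c] for c in RISK_CATEGORIES)
    if worst >= stop_at:
        return Decision.STOP
    if worst >= remediate_at:
        return Decision.REMEDIATE
    return Decision.PILOT
```

Using the maximum rather than an average is a deliberate choice here: a use case with one severe compliance exposure should not look acceptable because its other four categories score well.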

Rethinking AI Readiness Assessment Beyond Checklists

In response to these challenges, many organizations attempt to formalize readiness through checklists and maturity scores. Infrastructure readiness. Data readiness. Security readiness. While useful at a surface level, these approaches often miss the deeper interdependencies that determine whether AI adoption succeeds.

True readiness is multidimensional. Strategy, governance, data, architecture, operating models, talent, risk, and execution do not operate independently. Weakness in one dimension undermines strength in others. Advanced models cannot compensate for fragmented ownership. Fast experimentation cannot overcome the absence of production discipline.

This realization led to the GenAI Readiness Canvas. Not as a diagnostic formality, but as a leadership lens designed to make readiness visible across interconnected dimensions.

What the GenAI Readiness Canvas Actually Assesses

Most discussions of AI readiness remain abstract. The GenAI Readiness Canvas was designed to make readiness concrete, observable, and actionable.

The Canvas evaluates readiness across eight interconnected dimensions that together determine whether an organization can deploy GenAI safely and at scale:

- Business Alignment – clarity of intent, ownership, and measurable outcomes

- Process Readiness – stability and consistency of workflows AI will augment

- Data Readiness – trust, accessibility, governance, and contextual integrity of data

- Technology Foundation – cloud, integration, and AI platform maturity

- AI Governance & Risk – decision ownership, model oversight, and safety controls

- Security & Compliance – regulatory alignment, access control, and data protection

- Talent & Skills – AI literacy across product, engineering, risk, and business teams

- Change Readiness – cultural preparedness to operate differently with AI embedded

These dimensions are intentionally evaluated together. Strength in one area cannot compensate for weakness in another. Advanced models cannot offset unclear ownership. Strong experimentation culture cannot replace production discipline. Readiness emerges only when these dimensions mature in balance.

The Canvas shifts readiness from opinion to evidence by forcing leadership teams to confront where gaps exist and how those gaps interact under real operational pressure.

The Maturity Illusion in AI Adoption

One of the most consistent insights from readiness work is how frequently organizations overestimate their maturity. Pilots, demos, and isolated successes create the illusion of progress. In reality, many enterprises remain in early stages where AI capability depends on individuals rather than systems.

Governance is informal. Operating models are inconsistent. Risk management is reactive. AI transformation appears active, yet remains fragile.

PwC research reflects this gap, showing that while most leaders believe their organizations are progressing toward AI maturity, very few have established scalable governance, repeatable execution models, or production-grade controls. This mismatch between perception and reality is where many AI adoption efforts quietly stall.

From Perceived Maturity to Measured Readiness

A core reason AI initiatives stall is the absence of a shared, objective definition of readiness. To address this, the GenAI Readiness Canvas uses a five-level maturity model to assess each dimension consistently across the enterprise.

- Level 1 – Unprepared: AI experimentation exists, but foundational gaps create high risk. Governance, data trust, and ownership are largely absent.

- Level 2 – Emerging: Early pilots and tooling are in place, but efforts remain fragmented. Controls lag behind experimentation.

- Level 3 – Developing: Structured pilots are possible. Governance and operating discipline exist, but are not yet enterprise-grade.

- Level 4 – Advanced: GenAI systems operate reliably in production. Governance, monitoring, and accountability are embedded.

- Level 5 – Scaled & Governed: AI is part of the organization’s operating system, with continuous oversight, measurable impact, and low-friction scaling.

This maturity model does more than label progress. It creates alignment between leadership, technology, and risk teams by making readiness explicit and comparable across functions. Most importantly, it prevents organizations from scaling AI faster than their controls can support.
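One way to make "explicit and comparable" tangible is to record a level per dimension and report the weakest link. The sketch below does that for the eight dimensions and five levels described in this article. Taking the minimum as the overall level is an assumption of this example, motivated by the point that strength in one area cannot compensate for weakness in another. The names `Maturity`, `DIMENSIONS`, and `overall_readiness` are hypothetical.

```python
from enum import IntEnum


class Maturity(IntEnum):
    UNPREPARED = 1
    EMERGING = 2
    DEVELOPING = 3
    ADVANCED = 4
    SCALED_AND_GOVERNED = 5


# The eight Canvas dimensions, as named in the article.
DIMENSIONS = (
    "Business Alignment",
    "Process Readiness",
    "Data Readiness",
    "Technology Foundation",
    "AI Governance & Risk",
    "Security & Compliance",
    "Talent & Skills",
    "Change Readiness",
)


def overall_readiness(assessment: dict) -> tuple:
    """Return (weakest level, dimensions sitting at that level).

    Assumption: the overall level is the minimum across dimensions,
    not an average, so a single lagging dimension caps the result.
    """
    missing = [d for d in DIMENSIONS if d not in assessment]
    if missing:
        raise ValueError(f"unassessed dimensions: {missing}")

    floor = min(assessment[d] for d in DIMENSIONS)
    lagging = [d for d in DIMENSIONS if assessment[d] == floor]
    return floor, lagging


# Example: strong technology, weak governance.
assessment = {d: Maturity.ADVANCED for d in DIMENSIONS}
assessment["AI Governance & Risk"] = Maturity.EMERGING
level, lagging = overall_readiness(assessment)
print(level.name, lagging)
```

In this example the organization reads as Emerging overall despite seven Advanced dimensions, which is the maturity illusion the model is meant to expose.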

From Readiness to a Sustainable AI Roadmap

A credible AI roadmap does not begin with use cases. It begins with stabilization. Data foundations must be reliable. Governance structures must be explicit. Ownership and accountability must be defined. Operating models must support both experimentation and production simultaneously.

Only once these foundations are in place does Generative AI deployment deliver durable value. Only then does AI implementation move beyond pilots. Only then does AI deployment strategy become predictable rather than aspirational.

This sequencing transforms AI investment strategy from experimentation into organizational capability.

How Readiness Translates into a Real AI Roadmap

A credible AI roadmap does not start with use case ideation. It starts with sequencing readiness improvements so AI investments compound rather than collapse under scale.

The GenAI Readiness Canvas structures this sequencing into four deliberate horizons:

- Stabilize: Address critical gaps in governance, data trust, and decision ownership before expanding AI use.

- Enable: Build the minimum viable AI foundation: architecture, data pipelines, monitoring, and controls.

- Pilot: Validate high-value use cases under controlled conditions with clear success thresholds.

- Scale: Expand AI across the enterprise only once safety, trust, and accountability are proven.

This approach replaces reactive AI expansion with intentional capability building. It ensures that every new GenAI deployment strengthens the organization rather than introducing new fragility.
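The sequencing rule can be stated in a few lines of code. The sketch below assumes each horizon has exit criteria that someone marks as met, and returns the earliest horizon still open, so later work never counts until earlier horizons are closed. The function name `current_horizon` and the "completed set" representation are hypothetical choices for this illustration.

```python
from typing import Optional

# The four horizons, in the order the Canvas sequences them.
HORIZONS = ("Stabilize", "Enable", "Pilot", "Scale")


def current_horizon(completed: set) -> Optional[str]:
    """Return the earliest horizon whose exit criteria are not yet met.

    Returns None when all four horizons are complete. A later horizon
    marked complete does not let the organization skip an earlier one.
    """
    unknown = completed - set(HORIZONS)
    if unknown:
        raise ValueError(f"unknown horizons: {sorted(unknown)}")

    for horizon in HORIZONS:
        if horizon not in completed:
            return horizon
    return None
```

For instance, a team that has run successful pilots but never closed its governance gaps would still be placed in Stabilize: `current_horizon({"Enable", "Pilot"})` returns `"Stabilize"`.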

A Reality Seen Across Enterprises

In several large organizations, GenAI deployment initially succeeds within individual teams, producing impressive outputs and early productivity gains. However, as these systems are prepared for wider rollout, unresolved questions around data ownership, governance authority, and accountability quickly surface. What initially appears to be a technical scaling challenge often reveals itself as an operating model gap, one that was invisible during early experimentation but becomes unavoidable at enterprise scale.

This pattern is increasingly common across Enterprise AI adoption efforts.

NewVision’s POV

At NewVision, we approach AI adoption through a leadership lens, recognizing that sustainable outcomes emerge when organizational readiness evolves alongside technology.

Through SmartVision, our AI-driven innovation framework, we work closely with executive, product, and engineering leaders to create a shared understanding of what readiness truly means in the context of Enterprise GenAI. Rather than pushing organizations toward rapid experimentation or premature scale, we focus on helping leadership teams surface structural gaps early across strategy, governance, data, architecture, operating models, and risk.

Our work centers on sequencing AI transformation deliberately. We help organizations strengthen decision ownership, establish AI governance frameworks that enable innovation without compromising trust, stabilize data foundations, and align operating models so AI can be embedded responsibly into core workflows. This approach allows teams to move forward with clarity and scale AI with confidence.

In our experience, organizations that treat AI readiness assessment as an ongoing leadership discipline reduce downstream risk, accelerate time to value, and build more resilient AI systems. AI implementation succeeds when readiness becomes part of how the organization operates, not just a phase in delivery.

Final Thought: Readiness Shapes AI Transformation

Readiness is not a delay.

It is the foundation of every successful AI deployment strategy.

The GenAI Readiness Canvas is not a theoretical framework. It is a practical leadership tool designed to surface risk early, guide investment decisions, and enable AI to scale without eroding trust.

Organizations that treat readiness as an explicit discipline move faster with fewer setbacks. Those that skip it often learn the same lessons later—at significantly higher cost.

Readiness is the discipline that allows AI transformation to endure.